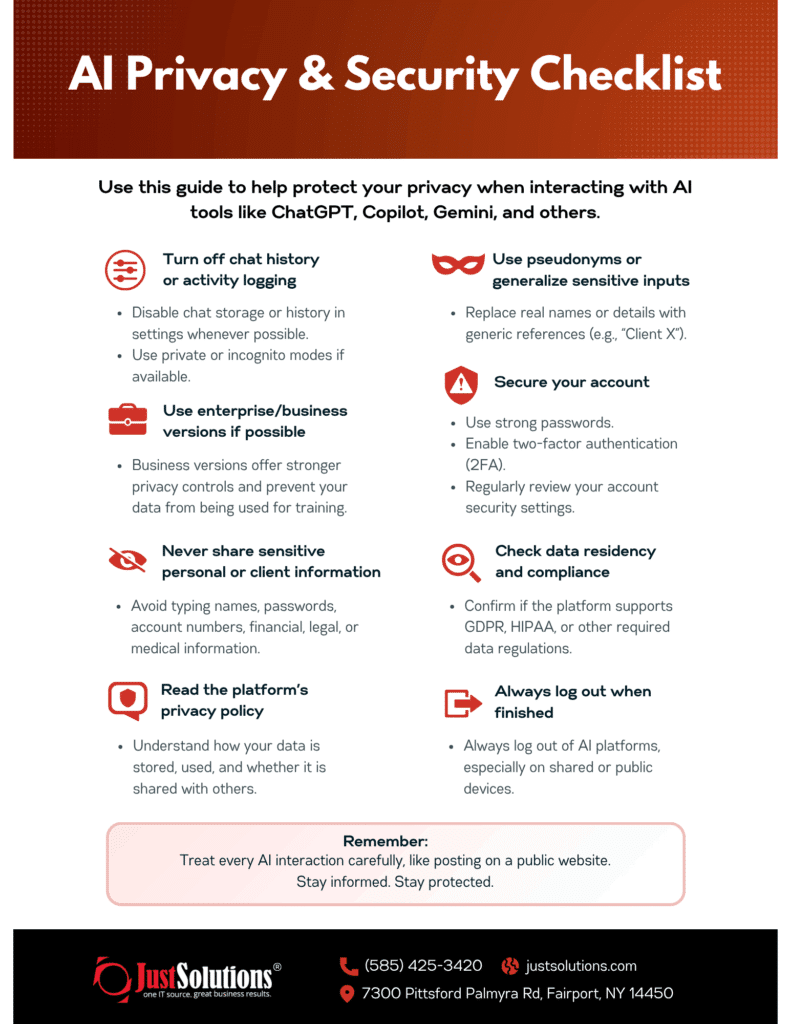

From Copilot to ChatGPT, AI tools are becoming part of the daily workflow for a lot of businesses. If you’re experimenting with AI or rolling it out to your team, you need to do it securely. Below is an AI privacy and security checklist you can use to make sure you’re protecting your data, your clients, and your company’s reputation while still getting the most from AI.

How to Use AI Securely

Watch Our Recent Webinar: How Businesses Can Thrive with Artificial Intelligence

#1 Turn Off Chat History or Activity Logging

Many AI platforms (like ChatGPT and Gemini) store your prompts by default, and in the past, some even used that data to train their models. That raised serious red flags, especially for businesses.

Most platforms now allow you to disable chat history or use private modes. In fact, as mentioned in our recent webinar, ChatGPT has revised its data policies 11 times in the last two years to respond to concerns. Microsoft’s Copilot, by design, never uses your business data to train its foundation models.

Best practice: Always check the settings and turn off history or data logging, especially when using free tools.

#2 Use Enterprise or Business Versions

Free versions of AI platforms might be fine for personal use, but they’re not built for business security.

If you’re serious about using AI for your business, don’t use the free versions. Paid plans, especially enterprise ones, let you isolate data, control retention, and even prevent exfiltration of sensitive content.

Microsoft’s Copilot is highlighted for its security-focused features: searchable logs, export restrictions, and integration with company-wide security settings. Other platforms are following suit, but often only in their premium offerings.

#3 Don’t Share Sensitive Personal or Client Information

Even with chat history off, best practice is to never enter names, passwords, account numbers, or proprietary information into AI tools.

“Think of it like a public forum,” the webinar explained. “If you wouldn’t post it on your website, don’t type it into a chatbot.”

This includes uploading files, a growing risk area. Users often drag and drop entire documents into chat windows for help analyzing them, but don’t realize what might be embedded in those files.
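One way to back up this rule is a quick automated check on a prompt, or on text extracted from a file, before it goes anywhere near a chatbot. The Python sketch below is illustrative only: the `PATTERNS` table and the `find_sensitive` function are hypothetical names, and the regular expressions are rough assumptions that catch a few obvious formats. It will not detect client names or proprietary information, so it supplements staff judgment rather than replacing it.

```python
import re

# Illustrative patterns only; a real deployment would need a broader, tested set.
PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "US Social Security number": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 12 to 19 digits, optionally separated by spaces or hyphens.
    "possible card or account number": re.compile(r"\b\d(?:[ -]?\d){11,18}\b"),
    "password or secret": re.compile(
        r"\b(?:password|passwd|pwd|api[_-]?key|secret)\b\s*[:=]\s*\S+",
        re.IGNORECASE,
    ),
}


def find_sensitive(text: str) -> list[tuple[str, str]]:
    """Return (label, matched_text) pairs for anything that looks sensitive."""
    findings = []
    for label, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            findings.append((label, match.group(0)))
    return findings


if __name__ == "__main__":
    prompt = "Reset it for jane@example.com, password: Hunter2!"
    for label, value in find_sensitive(prompt):
        print(f"Blocked: found {label}: {value!r}")
```

If `find_sensitive` returns anything, the safest response is to stop and rewrite the prompt rather than send it.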

#4 Read the Platform’s Privacy Policy

This isn’t the fun part, but it matters. Every AI provider has a privacy policy that tells you how they handle your data, including where it’s stored, how long it’s kept, and whether it’s shared.

For example, Gemini collects user data unless you opt out. Meanwhile, Copilot isolates your data by default. Understanding these differences helps you make smarter decisions about which tools your business can safely use.

#5 Use Pseudonyms or Generalize Input

If you’re working through real-world business scenarios, scrub the details first. Instead of saying “Jane from XYZ Corporation,” say “Client A.” AI can still help solve your problem without putting sensitive information at risk. This habit can make a big difference in minimizing exposure.
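The scrubbing step can be partly automated. The following is a minimal Python sketch, assuming you keep your own lookup table of real names and the placeholders you want in their place. The `pseudonymize` function and the `aliases` table are hypothetical names made up for this illustration, and the approach only replaces names you list explicitly, so anything not in the table still needs a manual review.

```python
import re


def pseudonymize(text: str, aliases: dict[str, str]) -> str:
    """Replace each listed real name with its placeholder in a single pass."""
    if not aliases:
        return text
    # Longest names first so "XYZ Corporation" wins over a shorter overlap.
    names = sorted(aliases, key=len, reverse=True)
    pattern = re.compile(
        r"(?<!\w)(" + "|".join(re.escape(n) for n in names) + r")(?!\w)",
        re.IGNORECASE,
    )
    lookup = {name.lower(): alias for name, alias in aliases.items()}
    return pattern.sub(lambda m: lookup[m.group(0).lower()], text)


if __name__ == "__main__":
    # Hypothetical lookup table, kept locally and never sent to the AI tool.
    aliases = {"Jane": "Contact 1", "XYZ Corporation": "Client A"}
    prompt = "Jane from XYZ Corporation asked about pricing."
    print(pseudonymize(prompt, aliases))
```

Because the lookup table stays on your side, you can map the placeholders in the AI's answer back to the real names yourself.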

#6 Keep Your Accounts Secure

You’d be surprised how many people reuse passwords or leave two-factor authentication disabled. If you’re giving your team access to AI tools, make sure they treat those accounts like they would any critical business software.

#7 Understand Data Residency and Compliance

Are your AI tools compliant with regulations like CMMC, HIPAA or GDPR? Where is the data being stored? Is it being transmitted to third parties?

In regulated industries, this matters. As pointed out in the webinar, AI use often flies under the radar of IT teams, especially when employees experiment with tools on their own. This “shadow IT” trend can open the door to serious risks if not addressed.

#8 Always Log Out When You’re Done

It sounds simple, but it’s often overlooked, especially on shared devices. Logging out ensures your previous sessions (and data) aren’t accidentally accessed by someone else.

Set Security Policies Before You Need Them

AI tools are showing up in every department. If you haven’t yet, now’s the time to set clear boundaries.

- Create clear policies around AI usage and security.

- Train your staff on how to use AI responsibly.

- Stick with business-grade tools that give you the controls you need.

Just as businesses had to put mobile phone policies in place years ago, they now need to do the same for AI.

Want Help Creating AI Usage Policies for Your Business?

AI has a lot to offer, and the benefits are real: more efficient workflows, faster responses, and better insights. But those benefits shouldn’t come at the cost of your company’s security.

The businesses getting the most out of AI are the ones setting the right boundaries from the start. Use the checklist above to make sure your team is using these tools smartly and safely.

Just Solutions can help your team safely adopt AI tools without opening the door to compliance headaches or data breaches. Contact us to learn more about how to get started using AI in your business.